Splitting Data with the Split Data Task

The Split task divides the dataset into three subsets of patterns:

the training set, used to build the model,

the test set, used to assess the accuracy of the model and

the validation set, used for tuning the model parameters. The validation set is not mandatory.

Prerequisites

the required datasets have been imported into the process

the data used for the model has been well prepared

a single unified dataset has been created by merging all the datasets imported into the process.

Additional tabs

The following additional tabs are provided:

Documentation tab where you can document your task,

Parametric options tab where you can configure process variables instead of fixed values. Parametric equivalents are expressed in italics in this page.

Procedure

Drag and drop the Split Data task onto the stage.

Connect the task that contains the dataset you want to split to the Split Data task.

Double click the Split Data task.

Configure the split options as described in the table below.

Save and compute the task.

Split options | ||

|---|---|---|

Name | PO | Description |

Number of training patterns | trainsize | Indicate the number of training patterns, either as an absolute number of patterns or as a percentage of the overall dataset, which will be used to create the training set. These patterns are used to build the model. |

Starting pattern for training set | trainstart | Indicate the starting point for the training set pattern either as an absolute value, or as a percentage of the whole. This option is valid only if No Shuffle is selected as the Data reshuffle policy. |

Number of test patterns | testsize | Indicate the number of test patterns, either as an absolute number of patterns or as a percentage of the overall dataset, which will be used to create the test set. These patterns are not used to build the model. |

Starting pattern for test set | teststart | Indicate the starting point for the test set pattern either as an absolute value, or as a percentage of the whole. This option is valid only if No Shuffle is selected as the Data reshuffle policy. |

Number of validation patterns | validsize | Indicate the number of validation patterns, either as an absolute number of patterns or as a percentage of the overall dataset, which will be used to create the validation set. The validation patterns cannot be used to create or test the model and are not mandatory as they are are used only for internal validation by some modeling methods. |

Starting pattern for validation set | validstart | Indicate the starting point for the validation set pattern either as an absolute value, or as a percentage of the whole. This option is valid only if No Shuffle is selected as the Data reshuffle policy. |

Data reshuffle policy | shuffletype | Indicate the required Data shuffle policy from the drop-down list:

|

Initialize random generator with seed | initrandom, iseed | Select Initialize random generator with seed if you want to set the seed for the random generator. This may be useful to make each execution reproducible. Otherwise, each execution of the same task (with same options) may produce dissimilar results due to the different random numbers generated to define training/test/validation sets. |

Example

The following examples are based on the Adult dataset.

Scenario data can be found in the Datasets folder in your Rulex installation.

The following steps were performed:

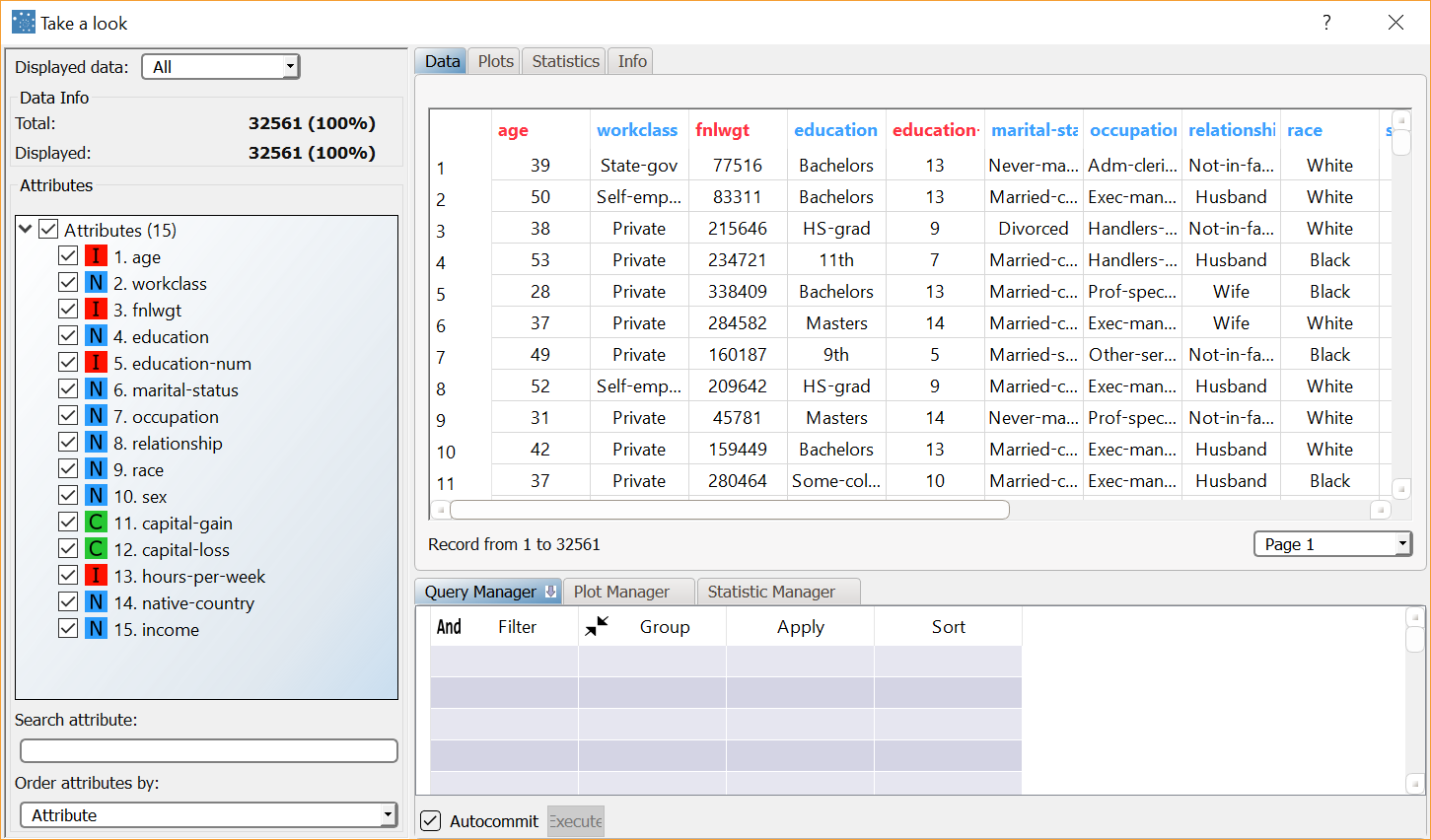

We use the Take a look function to visualize the initial data.

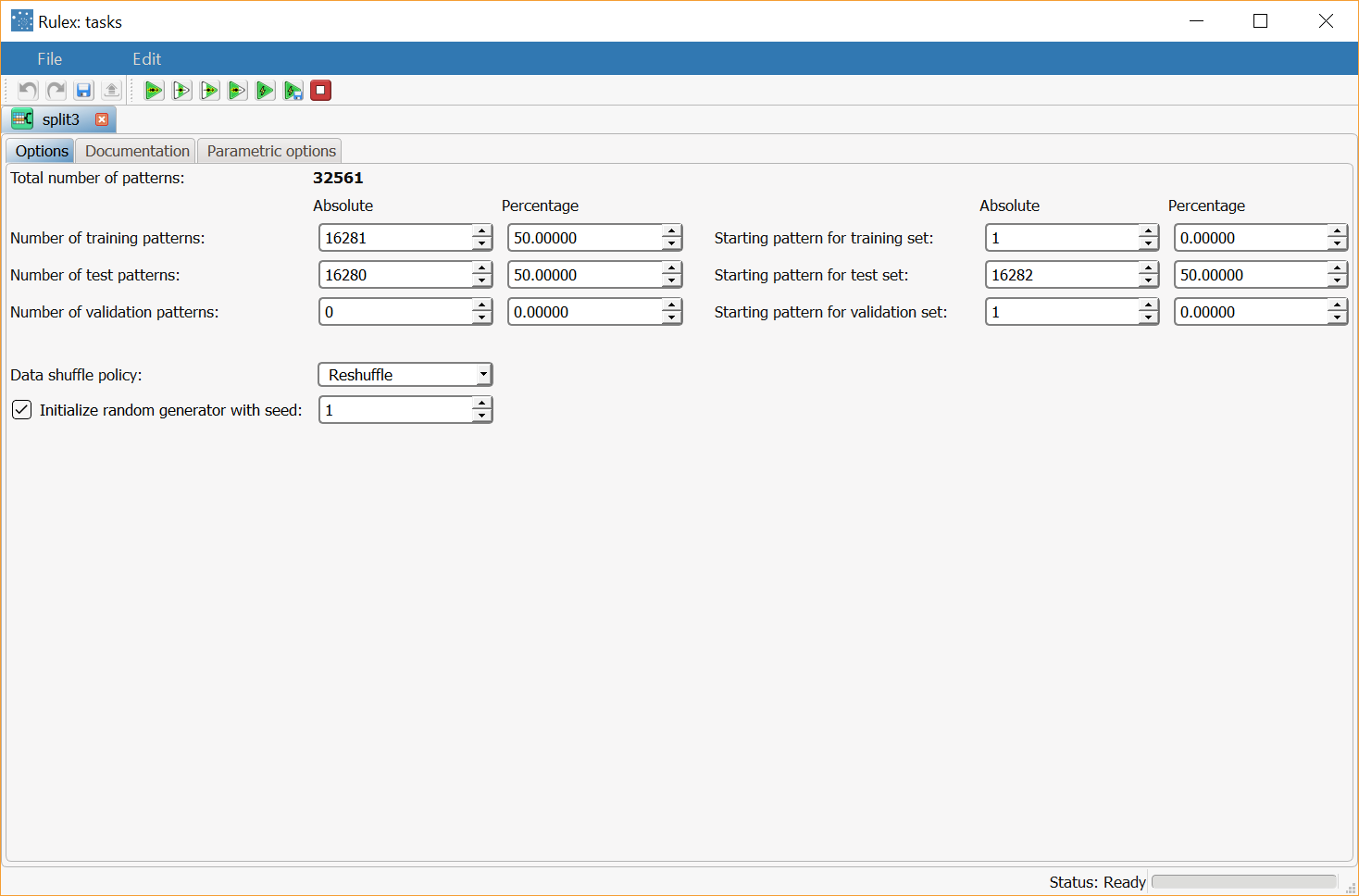

We then add a Split Data task to create the training and test sets.

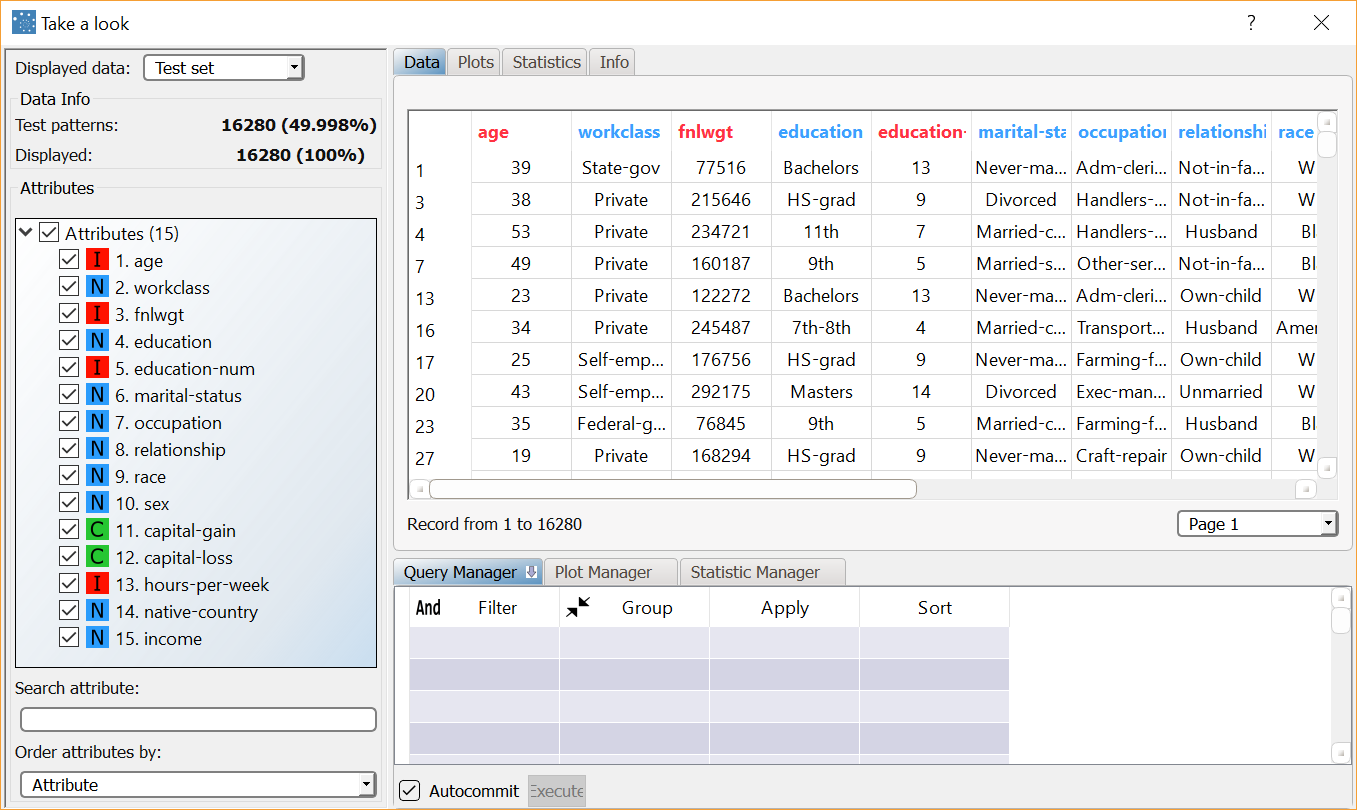

We finally use the Take a look function again to visualize the results.

Procedure | Screenshot |

|---|---|

After importing the adult.set dataset via an Import from Text File task, right-click the task and select Take a look to display the imported data. The original dataset contains 32561 patterns. | |

We want to divide the data from the source as follows:

We do not need a validation set. | |

Right-click the Split Data task and select Take a look to display the resulting data. We can see that the split operation has divided the data into:

|